Bagging Predictors in Machine Learning

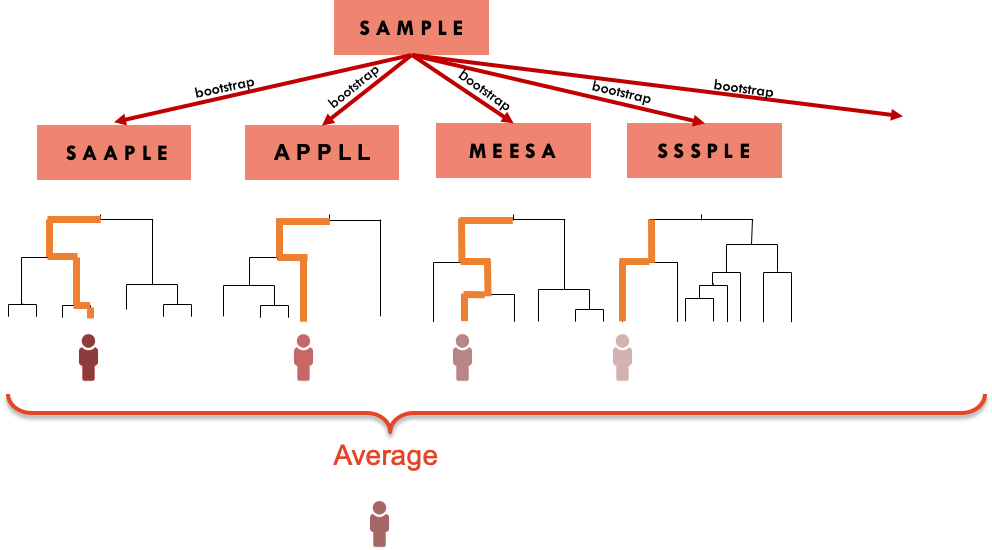

Bagging aims to improve the accuracy and performance of machine learning algorithms. It does so by replacing a single predictor with an aggregate predictor constructed from repeated bootstrap samples of the training data.


Bagging, also known as bootstrap aggregation, is an ensemble learning method that is commonly used to reduce variance within a noisy dataset.
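A minimal from-scratch sketch of the idea, assuming a one-dimensional regression problem. The base learner `fit_stump` (a single-split regression tree) and the wrapper `bagging_fit` are hypothetical names chosen for illustration; any function that takes `(xs, ys)` and returns a predictor could be passed in instead.

```python
import random
from statistics import mean

def fit_stump(xs, ys):
    """Hypothetical base learner: a 1-D regression stump.

    Splits x at one threshold and predicts the mean y on each side, choosing
    the midpoint threshold with the smallest squared error. Stumps are
    unstable: small changes in the data can move the split.
    """
    distinct = sorted(set(xs))
    if len(distinct) == 1:  # nothing to split on: predict the overall mean
        c = mean(ys)
        return lambda x: c
    best = None
    for lo, hi in zip(distinct, distinct[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        ml, mr = mean(left), mean(right)
        sse = sum((y - ml) ** 2 for y in left) + sum((y - mr) ** 2 for y in right)
        if best is None or sse < best[0]:
            best = (sse, t, ml, mr)
    _, t, ml, mr = best
    return lambda x: ml if x <= t else mr

def bagging_fit(xs, ys, fit, n_estimators=25, seed=0):
    """Bootstrap aggregation: fit `fit` on bootstrap replicates, average the predictions."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_estimators):
        idx = [rng.randrange(n) for _ in range(n)]  # n draws *with* replacement
        models.append(fit([xs[i] for i in idx], [ys[i] for i in idx]))
    return lambda x: mean(m(x) for m in models)

xs = list(range(10))
ys = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
bagged = bagging_fit(xs, ys, fit_stump)
print(bagged(0), bagged(9))
```

Averaging is the aggregation step for a numerical outcome; the number of replicates (`n_estimators`) and the fixed `seed` are arbitrary choices here.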

This sampling and training process is represented below. Both bagging and boosting can reduce variance and make the final model (predictor) more stable. Bagging predictors is a method for generating multiple versions of a predictor and using these to get an aggregated predictor.

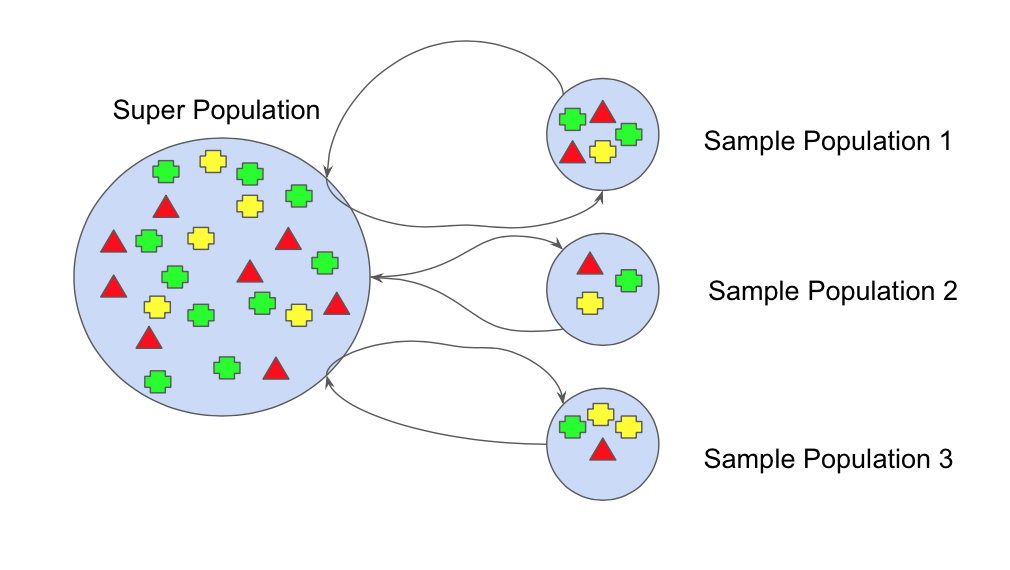

Both obtain the training sets for their models by random sampling. Bootstrap aggregating, also called bagging, is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms used in statistical classification and regression. It also reduces variance and helps to avoid overfitting. Although it is usually applied to decision tree methods, it can be used with any type of method. In bagging, predictors are constructed using bootstrap samples from the training set and then aggregated to form a bagged predictor.

Customer churn prediction was carried out using AdaBoost classification and BP (back-propagation) neural network techniques. According to Breiman, the aggregate predictor is therefore a better predictor than a single learning-set predictor [1–3].

From the diagram above, you can understand the basic difference between bagging and … If perturbing the learning set can cause significant changes in the predictor constructed, then bagging can improve accuracy. When sampling is performed without replacement, it is called pasting.
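Why instability matters can be sketched for a numerical outcome, along the lines of Breiman's argument. Assume $\varphi(x, \mathcal{L})$ is the predictor built from learning set $\mathcal{L}$ and define the aggregated predictor $\varphi_A(x) = E_{\mathcal{L}}\,\varphi(x, \mathcal{L})$. For a fixed input $x$ with outcome $y$:

$$
E_{\mathcal{L}}\big(y - \varphi(x, \mathcal{L})\big)^2 = y^2 - 2y\,E_{\mathcal{L}}\varphi(x, \mathcal{L}) + E_{\mathcal{L}}\varphi^2(x, \mathcal{L}) \;\ge\; \big(y - \varphi_A(x)\big)^2,
$$

because $E_{\mathcal{L}}\varphi^2(x, \mathcal{L}) \ge \big(E_{\mathcal{L}}\varphi(x, \mathcal{L})\big)^2$. The gap between the two sides is exactly $\operatorname{Var}_{\mathcal{L}}\,\varphi(x, \mathcal{L})$, so the improvement is large when the predictor changes a lot from one learning set to another and close to zero when it is stable. Bagging approximates $\varphi_A$ by averaging over bootstrap replicates instead of fresh learning sets.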

The aggregation averages over the versions when predicting a numerical outcome and does a plurality vote when predicting a class. Each bootstrap sample leaves out about 37% of the examples.
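The two aggregation rules can be sketched as small functions, assuming the B versions' predictions for one input have already been collected into a list. The function names are hypothetical; the tie-breaking rule is a choice made here, not something the text specifies.

```python
from collections import Counter
from statistics import mean

def aggregate_numeric(predictions):
    """Numerical outcome: average the predictions of the B versions."""
    return mean(predictions)

def aggregate_class(predictions):
    """Class outcome: plurality vote, i.e. the label predicted most often.

    A plurality needs no absolute majority. Ties go to the tied label that
    appears first in `predictions` (Counter.most_common keeps first-seen
    order among equal counts).
    """
    return Counter(predictions).most_common(1)[0][0]

print(aggregate_numeric([2.0, 3.0, 4.0]))               # 3.0
print(aggregate_class(["cat", "dog", "cat", "bird"]))   # cat
```

In the second call "cat" wins with 2 of 4 votes, which is a plurality but not a majority.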

To obtain the aggregate predictor, the replicate data sets $\{\mathcal{L}^{(B)}\}$ …
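Written out in Breiman's notation, where each $\mathcal{L}^{(B)}$ is one bootstrap replicate of the learning set $\mathcal{L}$ and $\varphi(x, \mathcal{L}^{(B)})$ is the predictor trained on it, the bagged predictor $\varphi_B$ is a sketch of the two aggregation rules described above. For a numerical outcome it averages over the replicates:

$$
\varphi_B(x) = \operatorname{av}_B\, \varphi\big(x, \mathcal{L}^{(B)}\big),
$$

and for a class label $j$ it takes a plurality vote:

$$
N_j = \#\{B : \varphi(x, \mathcal{L}^{(B)}) = j\}, \qquad \varphi_B(x) = \arg\max_j N_j .
$$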

Both bagging and boosting are ensemble techniques. Repeated tenfold cross-validation experiments have been used to predict the QLS and GAF functional outcomes of schizophrenia from clinical symptom scales with machine learning predictors such as the bagging ensemble model with feature selection, the bagging ensemble model, MFNNs, SVM, linear regression and random forests. In bagging, a random sample of data in a training set is selected with replacement, meaning that individual data points can be chosen more than once.
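Sampling with replacement is also where the "about 37%" figure comes from: a given example is missed by one draw with probability $1 - 1/n$, so it is missed by all $n$ draws with probability $(1 - 1/n)^n$, which approaches $e^{-1} \approx 0.368$ as $n$ grows. A small simulation sketch, using only Python's `random` module (function names are hypothetical):

```python
import random

def bootstrap_indices(n, rng):
    """Draw n indices uniformly *with replacement*: one bootstrap sample."""
    return [rng.randrange(n) for _ in range(n)]

def left_out_fraction(n, rng):
    """Fraction of the n original examples that never appear in one bootstrap sample."""
    chosen = set(bootstrap_indices(n, rng))
    return 1 - len(chosen) / n

rng = random.Random(42)
print(sorted(bootstrap_indices(10, rng)))  # typically some indices repeat and others are missing

fracs = [left_out_fraction(1000, rng) for _ in range(200)]
print(sum(fracs) / len(fracs))   # close to 1/e, about 0.368
print((1 - 1 / 1000) ** 1000)    # exact expected fraction for n = 1000
```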

These left-out examples can be used to form accurate estimates. The results show that clustering before prediction can improve prediction accuracy. Statistics Department, University of California, Berkeley, CA 94720. Editor: …
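One common use of the left-out (out-of-bag) examples is an error estimate that needs no separate test set: each example is predicted only by the models whose bootstrap sample did not contain it. The sketch below assumes a regression setting and mean squared error; `oob_mse` and the 1-nearest-neighbour base learner are hypothetical, chosen only to keep the bookkeeping short.

```python
import random
from statistics import mean

def oob_mse(xs, ys, fit, n_estimators=50, seed=0):
    """Out-of-bag estimate of mean squared error for a bagged regressor.

    Each model is scored only on the examples left out of its bootstrap
    sample; an example's OOB prediction is the average over those models.
    """
    rng = random.Random(seed)
    n = len(xs)
    oob_preds = [[] for _ in range(n)]  # predictions from models that never saw example i
    for _ in range(n_estimators):
        idx = [rng.randrange(n) for _ in range(n)]
        model = fit([xs[i] for i in idx], [ys[i] for i in idx])
        in_bag = set(idx)
        for i in range(n):
            if i not in in_bag:
                oob_preds[i].append(model(xs[i]))
    # Examples that landed in every bootstrap sample have no OOB prediction; skip them.
    errors = [(ys[i] - mean(p)) ** 2 for i, p in enumerate(oob_preds) if p]
    if not errors:
        raise ValueError("no out-of-bag predictions; increase n_estimators")
    return mean(errors)

def fit_nearest_neighbour(xs, ys):
    """Hypothetical base learner: 1-nearest-neighbour regression on 1-D inputs."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]

xs = list(range(20))
ys = [2.0 * x for x in xs]
print(oob_mse(xs, ys, fit_nearest_neighbour))
```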

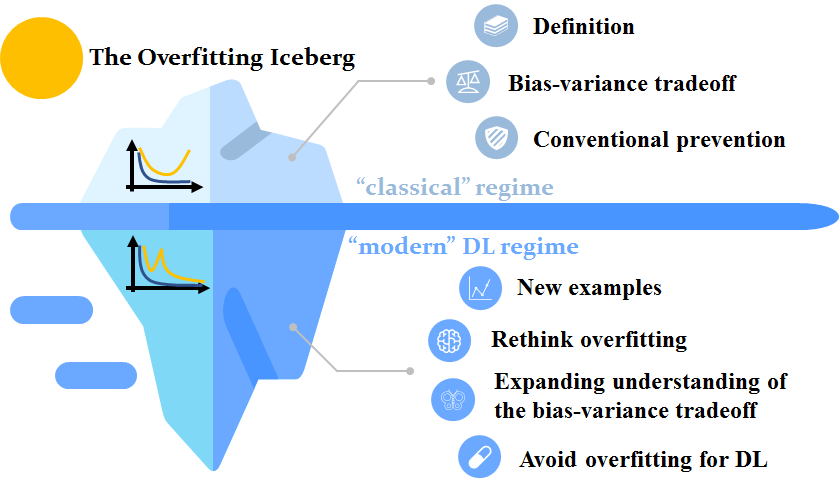

Methods such as decision trees can be prone to overfitting the training set, which can lead to wrong predictions on new data. After several data samples are generated, these … Important customer groups can also be determined based on customer behavior and temporal data.

The multiple versions are formed by making bootstrap replicates of the learning set and using these as new learning sets. In machine learning, we have a set of input variables x that are used to determine an output variable y. Tests on real and simulated data sets using classification and regression trees and subset selection in linear regression show that bagging can give substantial gains in accuracy.

Machine Learning, 24, 123–140 (1996). © 1996 Kluwer Academic Publishers, Boston. The vital element is the instability of the prediction method. In other words, both bagging and pasting allow training instances to be sampled several times across multiple predictors, but only bagging allows training instances to be sampled several times for the same predictor.
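The difference between bagging and pasting comes down to how each predictor's index set is drawn. A sketch using Python's `random` module, with a hypothetical `draw_subsets` helper; scikit-learn's `BaggingClassifier` exposes the same switch through its `bootstrap` parameter.

```python
import random

def draw_subsets(n, n_estimators, sample_size, bootstrap, seed=0):
    """Training-index sets for each predictor drawn from n examples.

    bootstrap=True  -> bagging: with replacement (an index may repeat within one set)
    bootstrap=False -> pasting: without replacement (no repeats within one set;
                       requires sample_size <= n)
    In both cases the same index can appear in the sets of several predictors.
    """
    rng = random.Random(seed)
    subsets = []
    for _ in range(n_estimators):
        if bootstrap:
            subsets.append([rng.randrange(n) for _ in range(sample_size)])
        else:
            subsets.append(rng.sample(range(n), sample_size))
    return subsets

bagged_sets = draw_subsets(10, 3, 10, bootstrap=True)
pasted_sets = draw_subsets(10, 3, 7, bootstrap=False)
print(bagged_sets)
print(pasted_sets)
```

Pasting is usually run with `sample_size` smaller than `n`; with `sample_size == n` every predictor would see the full training set, just in a different order.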

Bagging predictors, by L. Breiman, Machine Learning 24 (1996).

A relationship exists between the …

Published 1 August 1996. Bootstrap aggregation (bagging) is an ensemble method that attempts to reduce overfitting in classification or regression problems. Manufactured in The Netherlands.
